Why Sponsor Oils? | blog | oilshell.org

Yesterday's post gave different views on Shell Scripts As Executable Documentation. I tagged it #shell-the-good-parts and #comments.

This post has the same two tags. It shows examples of a multiprocess architecture for multimedia:

I would call this reuse by versionless protocols, as opposed to reuse by fragile libraries. (Related: my comment about textual protocols, which I'll elaborate on in the future.)

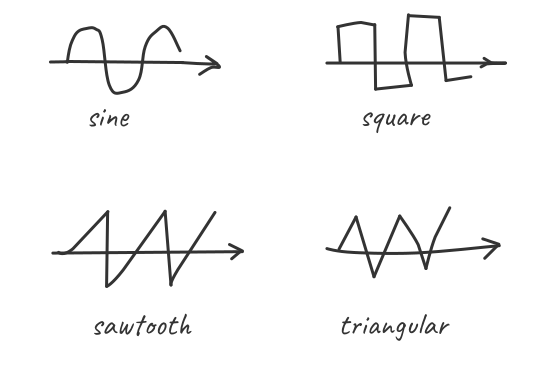

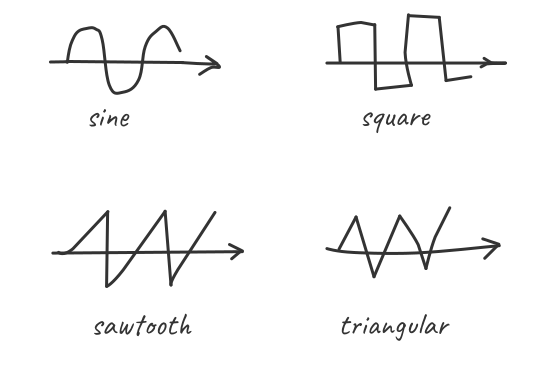

How to create minimal music with code in any programming language (zserge.com via Hacker News)

265 points, 78 comments - 67 days ago

Summary: you can generate music by piping raw samples into programs like `mplayer` or `ffplay`.
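Here is a minimal Python sketch of that idea: it computes samples and writes the raw bytes to stdout. The sample format (8000 Hz, unsigned 8-bit, mono) and the note frequencies are assumptions chosen for simplicity. `tone` and `melody` are hypothetical helper names, not code from the zserge post.

```python
import math
import sys

RATE = 8000  # samples per second (assumed; matches aplay's default rate)


def tone(freq_hz, seconds, rate=RATE, volume=0.5):
    """Return a sine wave as unsigned 8-bit samples centered on 128."""
    n = int(seconds * rate)
    out = bytearray(n)
    for i in range(n):
        s = math.sin(2 * math.pi * freq_hz * i / rate)
        out[i] = int(128 + 127 * volume * s)  # stays within 0..255
    return bytes(out)


def melody(freqs, note_seconds=0.25):
    """Concatenate one fixed-length tone per frequency."""
    return b"".join(tone(f, note_seconds) for f in freqs)


if __name__ == "__main__":
    # Hypothetical melody: A4, C5, E5, A5 (Hz). Raw bytes go to stdout.
    sys.stdout.buffer.write(melody([440.0, 523.25, 659.25, 880.0]))
```

On Linux, `python3 tone.py | aplay` should play it, because `aplay` assumes unsigned 8-bit, 8000 Hz, mono input when it gets no format options. Players like `ffplay` or `mplayer` must be told the raw format explicitly. Check their documentation for the exact flags.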

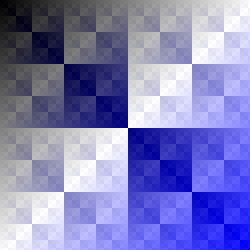

Echo/printf to write images in 5 LoC with zero libraries or headers (vidarholen.net via Hacker News)

129 points, 23 comments - 61 days ago

With the Netpbm file formats, it’s trivial to output pixels using nothing but text based IO

In this case, the protocol / generated format is text rather than binary.
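Any language that can print text can do the same thing. The minimal Python sketch below emits a plain-text PPM (magic number `P3`) gradient. The image size and colors are arbitrary, and `ppm_gradient` is a hypothetical helper name.

```python
import sys


def ppm_gradient(width, height):
    """Return a plain PPM image as text: red increases left to right,
    green increases top to bottom, blue is fixed at 128."""
    # Header: magic number, width and height, maximum color value.
    lines = ["P3", f"{width} {height}", "255"]
    for y in range(height):
        for x in range(width):
            r = x * 255 // max(width - 1, 1)
            g = y * 255 // max(height - 1, 1)
            # One "R G B" triple per line keeps lines under the
            # 70-character limit the plain Netpbm formats recommend.
            lines.append(f"{r} {g} 128")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sys.stdout.write(ppm_gradient(64, 48))
```

To view the result, redirect the output to a file, e.g. `python3 gradient.py > gradient.ppm`. Then open the file in a viewer that understands Netpbm, or convert it with a tool such as ImageMagick.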

Are the above techniques silly hacks? Isn't the better solution to use a "proper" library?

No, you can render professional-quality computer graphics with this architecture. My 2018 Recurse Center project taught me this:

I wanted to work with graphics rather than text, but I still ended up making use of shell. Why?


I touched on the relation between Unix and the web in this February 2020 episode of #shell-the-good-parts. Summary:

Now that we've seen some Unix-y examples of audio, images, and 3D graphics, we can add to the analogy:

In a sense, you're reusing the web browser to provide a rich experience to visitors.

Importantly, you can write the web server in any language. This means that Unix-style and web-style multiprocess programming enables language diversity.

This is good news for designers of nascent languages, e.g. on /r/ProgrammingLanguages! You don't have to write a "binding" to do fun things with a language. You can print data to stdout or write a web server.
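Here is a concrete version of "write a web server", as a minimal sketch that uses only Python's standard `http.server` module. The program produces nothing but text: an HTML page with an inline SVG drawing. The browser does all of the rendering. The page contents and the names `render_page`, `PageHandler`, and `serve` are illustrative assumptions.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_page():
    """Return HTML with an inline SVG; the browser does the actual drawing."""
    circles = "".join(
        f'<circle cx="{20 + 40 * i}" cy="50" r="15" fill="rgb({25 * i},100,200)"/>'
        for i in range(10)
    )
    return (
        "<!DOCTYPE html><html><body>"
        f'<svg xmlns="http://www.w3.org/2000/svg" width="420" height="100">{circles}</svg>'
        "</body></html>"
    )


class PageHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = render_page().encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Serve the page on localhost; blocks until interrupted (Ctrl-C)."""
    with HTTPServer(("127.0.0.1", port), PageHandler) as httpd:
        httpd.serve_forever()
```

Calling `serve(8000)` serves the page at http://127.0.0.1:8000/ until the process is interrupted. `http.server` is intended for local experiments, not production use.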

An esoteric raytracer in CMake made the rounds recently. I suggested that this program can also be expressed in shell, since shell naturally supports multiple processes for parallel rendering, just as we did with PBRT.
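The same parallel structure can be sketched in Python. The image is split into horizontal bands. Each band is rendered independently in its own process, and the text outputs are concatenated in row order to form one plain PPM. In shell, you would instead start one renderer per band in the background with `&`, call `wait`, and concatenate the outputs. Here `concurrent.futures.ProcessPoolExecutor` stands in for that. The scene (one diffusely lit sphere), the image size, and all function names are assumptions for illustration. This is not the CMake raytracer's code or PBRT's.

```python
import math
import sys
from concurrent.futures import ProcessPoolExecutor

WIDTH, HEIGHT = 80, 60  # hypothetical image size
LIGHT = (0.577, 0.577, 0.577)  # roughly normalized (1, 1, 1) light direction


def shade(x, y):
    """Gray level 0..255 for pixel (x, y): one ray from the origin, one unit
    sphere centered at (0, 0, -3), simple diffuse lighting."""
    # Direction through the pixel on the image plane z = -1.
    dx = (2 * (x + 0.5) / WIDTH - 1) * (WIDTH / HEIGHT)
    dy = 1 - 2 * (y + 0.5) / HEIGHT
    dz = -1.0
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    dx, dy, dz = dx / norm, dy / norm, dz / norm
    # Solve |t*d - c|^2 = 1 for t, with sphere center c = (0, 0, cz).
    cz = -3.0
    b = dz * cz                      # d . c
    disc = b * b - (cz * cz - 1.0)
    if disc < 0:
        return 0                     # miss: black background
    t = b - math.sqrt(disc)          # nearest intersection
    nx, ny, nz = t * dx, t * dy, t * dz - cz  # unit normal, since radius is 1
    lx, ly, lz = LIGHT
    return int(255 * max(0.0, nx * lx + ny * ly + nz * lz))


def render_band(rows):
    """Render rows [y0, y1) as plain-PPM pixel lines. Bands are independent."""
    y0, y1 = rows
    lines = []
    for y in range(y0, y1):
        for x in range(WIDTH):
            v = shade(x, y)
            lines.append(f"{v} {v} {v}")
    return lines


def render_parallel(workers=4):
    """Render each band in a separate process, then stitch them in order."""
    step = math.ceil(HEIGHT / workers)
    bands = [(y, min(y + step, HEIGHT)) for y in range(0, HEIGHT, step)]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the same order as `bands`.
        body = [line for part in pool.map(render_band, bands) for line in part]
    return "\n".join(["P3", f"{WIDTH} {HEIGHT}", "255", *body]) + "\n"


if __name__ == "__main__":
    sys.stdout.write(render_parallel())
```

Each band depends only on its row range, so the split needs no shared state. That is why plain processes are enough to parallelize it.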

My comment on Ray Tracing in pure CMake (64.github.io via Hacker News)

292 points, 166 comments - 15 days ago

I got a couple great responses!

`sqrt()`, in `math.sh`.

Important: These pieces of code are different from the ones above! They are more "esoteric" because doing math in shell is slow.
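Shell arithmetic (`$(( ))`) works only on integers, which is one reason functions like `sqrt()` are awkward to write in shell. The Python sketch below limits itself to integer operations that `$(( ))` also provides. It computes an integer square root with Newton's method and adds a fixed-point wrapper. This is not the code from `math.sh`; `isqrt`, `fixed_sqrt`, and the scale of 1000 are hypothetical. (Python itself provides `math.isqrt`; the point here is the integer-only technique.)

```python
SCALE = 1000  # hypothetical fixed-point scale: three decimal digits


def isqrt(n):
    """floor(sqrt(n)) by Newton's method, using only integer operations.
    Values stay non-negative, so // agrees with shell's truncating division."""
    if n < 0:
        raise ValueError("isqrt of a negative number")
    if n < 2:
        return n
    x = n
    y = (x + n // x) // 2
    while y < x:  # the estimates decrease until they reach floor(sqrt(n))
        x = y
        y = (x + n // x) // 2
    return x


def fixed_sqrt(x_fixed, scale=SCALE):
    """sqrt for fixed-point values, where x_fixed stands for x_fixed / scale.
    Uses sqrt(x_fixed / scale) * scale == sqrt(x_fixed * scale)."""
    return isqrt(x_fixed * scale)
```

For example, `fixed_sqrt(2000)` returns 1414, which represents about 1.414.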

However, I hope to add floating point to the Oil language in the near future, as well as a richer notion of functions. Combined with Oil's more familiar syntax, those features might make raytracing in shell not so esoteric!

Ad: I need help with Oil! Just running OSH on existing shell scripts is useful, and there are many other ways to help.